OOD Detection Benchmark

The OODDB (OOD Detection Benchmark) is a comprehensive benchmark suite designed to evaluate machine learning models performing Out-Of-Distribution Detection, with a specific focus on its semantic aspect (a.k.a. Semantic Novelty Detection). In this task, a model has access to a set of labeled samples (support set) that represents the known categories and it has to identify the test samples as either known (i.e., belonging to those same classes) or unknown (i.e., belonging to other unseen ones).

The benchmark includes datasets depicting different kind of subjects (generic objects, textures, scenes, aerial views), with multiple levels of granularity and across different visual domains.

In particular, it’s designed to test the models both in a cross-domain and intra-domain scenario (i.e., with or without a visual domain shift between the support and test distributions), aiming at

an evaluation which is solely centered on the semantic concepts represented by the datasets categories.

The cross-domain settings can be further divided into single-source or multi-source, depending on whether the labeled data samples belong to one or multiple domains.

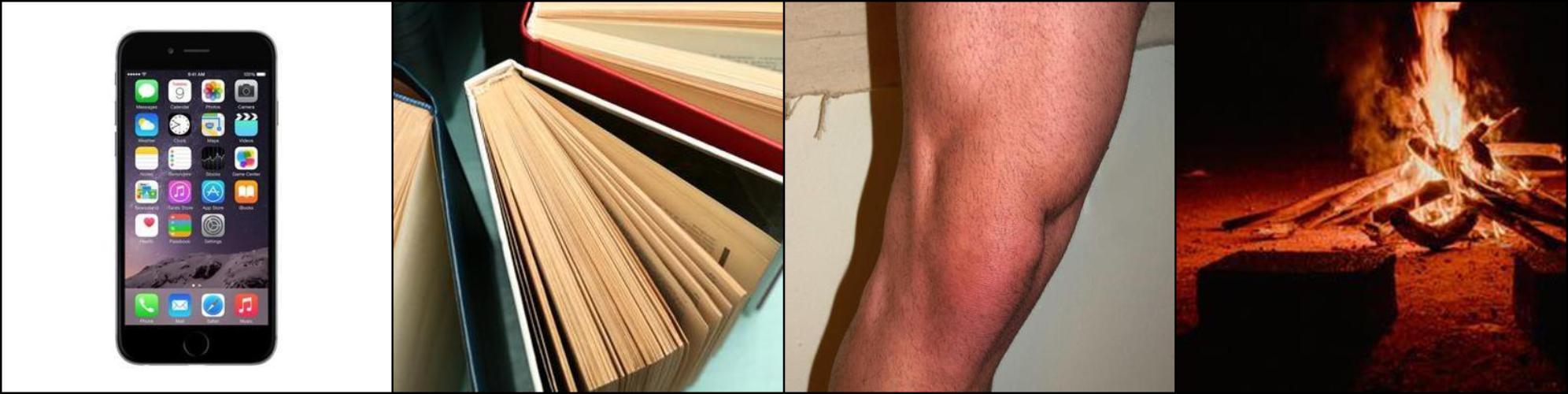

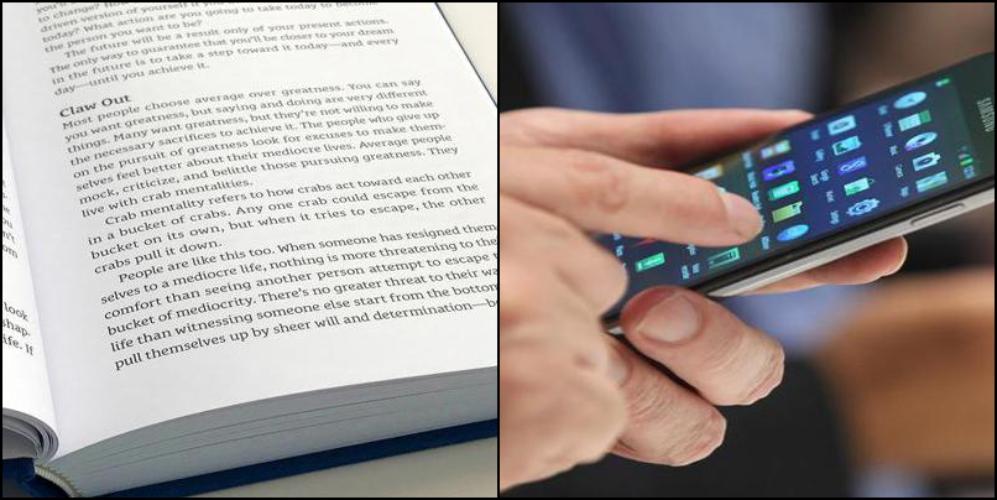

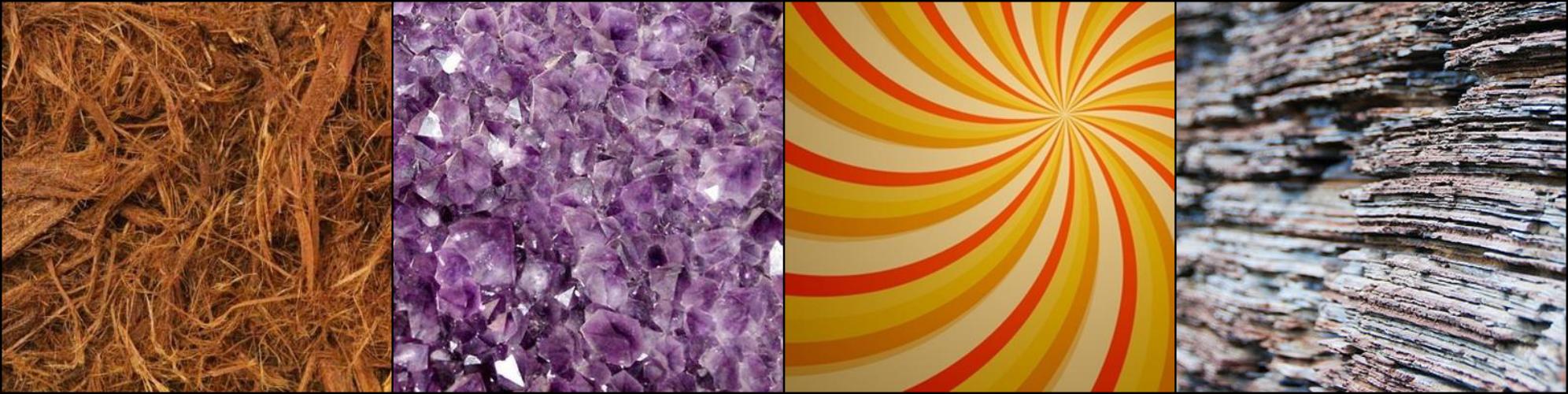

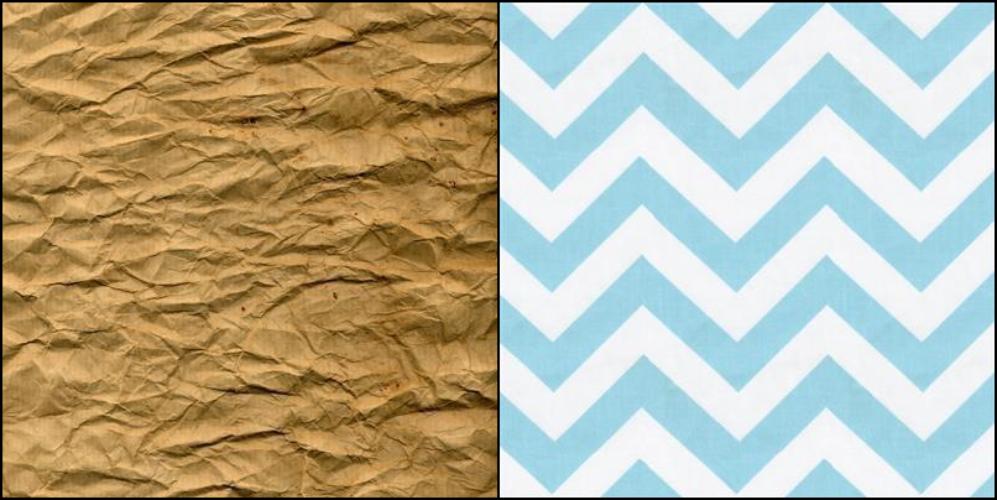

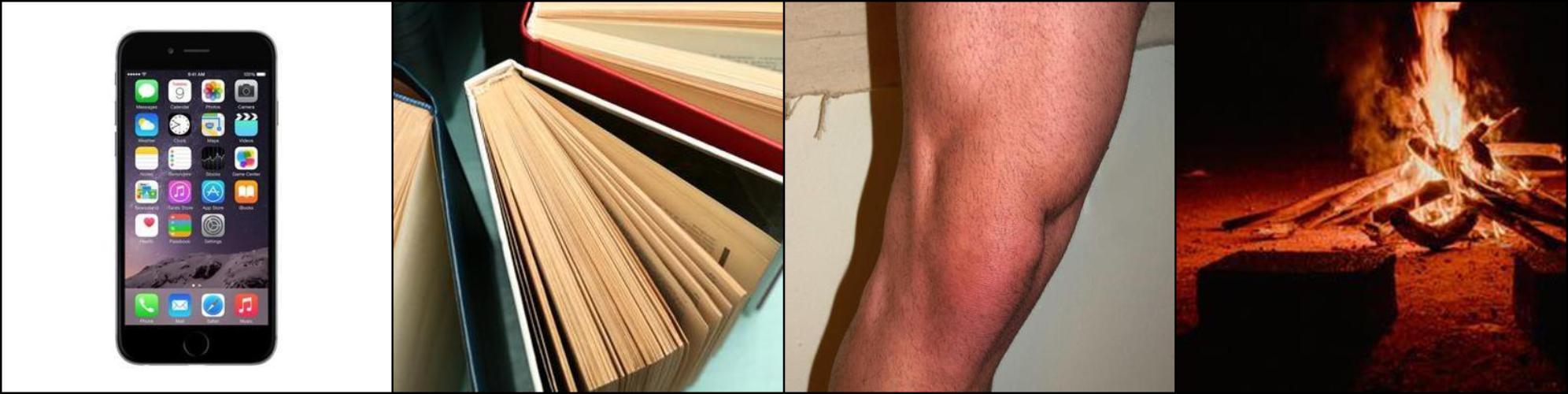

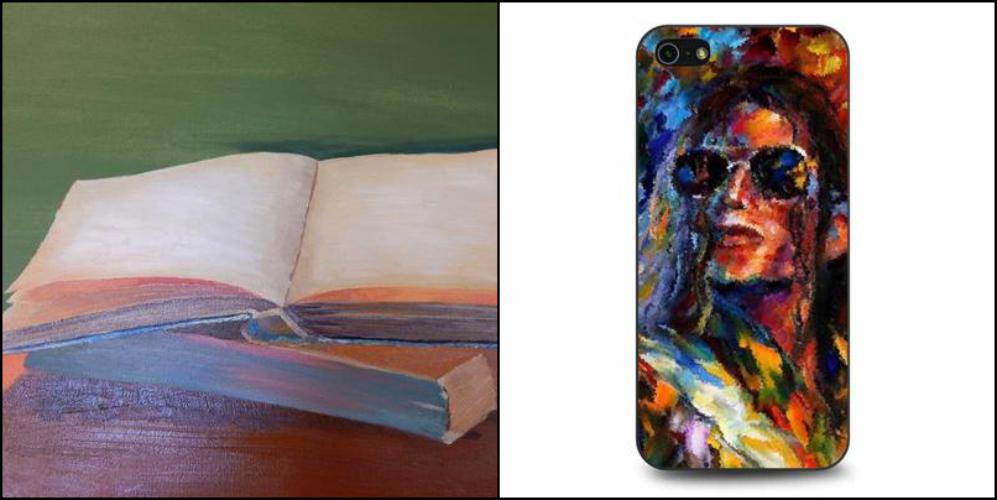

We report some visual examples for the supported tracks below (see Data for more details):

Intra-domain:

| Dataset | Train (Support) | Test Known | Test Unknown |

|---|---|---|---|

| DomainNet Real |  |

|

|

| DTD |  |

|

|

| PatternNet |  |

|

|

| Stanford Cars |  |

|

|

| SUN |  |

|

|

Cross-domain (single and multi-source):

| Dataset | Train (Support) | Test Known | Test Unknown |

|---|---|---|---|

| Real → Painting |  |

|

|

| All → Painting |  |

|

|

Data

The benchmark includes the following datasets:

- DomainNet

(paper ↗,

website ↗, per-domain downloads:

clipart,

infograph,

painting,

quickdraw,

real,

sketch):

intra-domain settings: 6

cross-domain settings: 36 (30 single-source and 6 multi-source) - DTD

(paper ↗,

website ↗,

download):

intra-domain settings: 1 - PatternNet

(paper ↗,

website ↗,

download ↗):

intra-domain settings: 1 - Stanford Cars

(paper ↗,

kaggle ↗,

download ↗):

intra-domain settings: 1 - SUN

(paper ↗,

website ↗,

download):

intra-domain settings: 1

We provide train and test splits for each dataset.

A train split represents the ID (in-distribution) data (i.e., the known classes), thus, it only contains a subset of the categories of the corresponding test split (which instead includes both ID and OOD samples).

More specifically, for each dataset we performed 3 different random divisions of its categories into known (ID) and unknown (OOD) ones. We refer to these 3 splits as data orders and we provide a train-test split for each one of them.

(In order to increase the statistical relevance of the results, it is advisable to

repeat the evaluation for all 3 data orders in the given setting and to average the obtained values.)

For more information, see Datasets.

Setup and Usage

You can easily access the splits and run the benchmark by following the instructions we provide on GitHub ↗.